See THIS POST

Notice the 2,000 upvotes?

https://gist.github.com/XtremeOwnageDotCom/19422927a5225228c53517652847a76b

It’s mostly bot traffic.

Important Note

The OP of that post did admit to purposely using bots for that demonstration.

I am not making this post specifically about that post. Rather, we need to collectively organize and find a method.

Defederation is a nuke-from-orbit approach, which WILL cause more harm than good over the long run.

Having admins proactively monitor their content and communities helps, as does enabling new-user approvals, captchas, email verification, etc. But this does not solve the problem.

The REAL problem

But the real problem is this: the fediverse is so open that there is NOTHING stopping dedicated bot owners and spammers from…

- Creating new instances to host bots, then federating with other servers. (Spinning up a new instance can be fully automated and completed in UNDER 15 seconds.)

- Hiring kids in Africa and India to create accounts for 2 cents an hour. NEWS POST 1 POST TWO

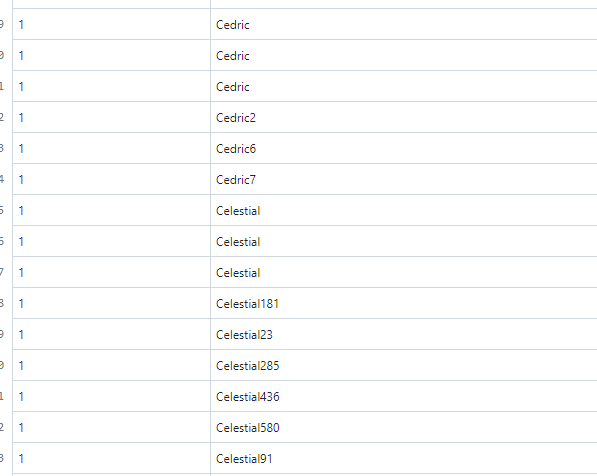

- Lemmy is EXTREMELY trusting. For example, go look at the stats for my instance online… (lemmyonline.com). I can assure you I don’t have 30k users and 1.2 million comments.

- There are no built-in “real-time” methods in the UI for admins to identify suspicious activity from their users; I am only able to fetch this data directly from the database. I don’t think it is even exposed through the REST API.
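Until something like this exists in Lemmy itself, an admin could run a small out-of-band check over vote data pulled from the database. The sketch below is hypothetical: the `Vote` record, the `VoteRateMonitor` class, and the 30-votes-per-60-seconds default are assumptions for illustration, not anything Lemmy provides. It keeps a sliding window of recent vote timestamps per account and flags any account that exceeds the limit.

```python
from __future__ import annotations

from collections import defaultdict, deque
from dataclasses import dataclass


@dataclass(frozen=True)
class Vote:
    account: str      # hypothetical: the voting account's name
    timestamp: float  # seconds since the epoch


class VoteRateMonitor:
    """Flags accounts that cast more than `max_votes` votes within `window_s` seconds.

    Hypothetical helper; the defaults are illustrative, not tuned values.
    """

    def __init__(self, max_votes: int = 30, window_s: float = 60.0) -> None:
        self.max_votes = max_votes
        self.window_s = window_s
        self._recent: dict[str, deque[float]] = defaultdict(deque)

    def observe(self, vote: Vote) -> bool:
        """Record one vote (assumed to arrive in time order); return True if the account is over the limit."""
        times = self._recent[vote.account]
        times.append(vote.timestamp)
        # Drop timestamps that have slid out of the window.
        while vote.timestamp - times[0] > self.window_s:
            times.popleft()
        return len(times) > self.max_votes


monitor = VoteRateMonitor(max_votes=3, window_s=10)
flags = [monitor.observe(Vote("bot_1", t)) for t in (0, 1, 2, 3)]
print(flags)  # [False, False, False, True]
```

A fixed rate limit like this only catches crude bots; anything throttled to stay under the threshold slips through, so it is a first filter rather than a fix.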

What can happen if we don’t identify a solution.

We know Meta wants to infiltrate the fediverse. We know Reddit wants the fediverse to fail.

If a single user with limited technical resources can manipulate that content, as was proven above,

What is going to happen when big-corpo wants to swing their fist around?

Edits

- Removed most of the images showing specific instances. Some of those issues have already been taken care of. Also, I don’t want to distract from the ACTUAL problem.

- Cleaned up post.

Lol well it was fun while it lasted! Man there are some really greedy assholes out there.

There are no built-in “real-time” methods in the UI for admins to identify suspicious activity from their users; I am only able to fetch this data directly from the database. I don’t think it is even exposed through the REST API.

The people doing the development seem to have zero concern that all the major servers are crashing with nginx 500 errors on their front pages under routine, moderate loads, nothing close to what a major website handles. There is no concern for alerting operators to internal federation failures, etc.

I am only able to fetch this data directly from the database.

I had to resort to this too, and published an open-source tool (primitive and inelegant) to try to get something out there for server operators: !lemmy_helper@lemmy.ml

Thanks, I’ll take a look at that one.

If you have SQL statements to share, please do. I’ll toss them into the app.

I believe you already saw my post yesterday, for auditing comments, voting history, and post history, right?

The place feels different today than it did just a couple of days ago, and it positively reeks of bots.

I’m seeing far fewer original posts and far more links to karma-farmer-quality pabulum, all of which somehow get hundreds of upvotes almost instantly.

The bots are here. And they’re circlejerking.

If you’ve always had e-mail verification turned on, you can get rid of some of these junk sign-ups relatively easily. I wrote a guide for it here: https://lemdit.com/post/16430

From what I’ve seen, most of the bot sign-ups that are swelling instance user numbers wouldn’t have passed e-mail verification. I think it was done mostly to prove a point, rather than as an attempt to actually use those accounts.

Instances that didn’t have e-mail verification turned on are in a much harder spot.
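The selection logic behind that kind of cleanup can be sketched as a filter: an account that never verified its e-mail, is past a short grace period, and has never posted or commented is a reasonable removal candidate. This is not the method from the linked guide; the `Signup` record, its fields, and the 3-day grace period are hypothetical, and on a real instance the data would come from the database rather than Python objects.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class Signup:
    # Hypothetical record; Lemmy's real schema is different.
    name: str
    email_verified: bool
    created: datetime
    post_count: int
    comment_count: int


def junk_signups(signups: list[Signup], now: datetime,
                 grace: timedelta = timedelta(days=3)) -> list[Signup]:
    """Unverified, inactive accounts older than `grace` (assumed cleanup criteria)."""
    return [
        s for s in signups
        if not s.email_verified
        and now - s.created > grace          # give real users time to click the link
        and s.post_count == 0
        and s.comment_count == 0             # never touch accounts with activity
    ]


now = datetime(2023, 7, 1, tzinfo=timezone.utc)
signups = [
    Signup("bot_1", False, now - timedelta(days=10), 0, 0),
    Signup("newcomer", False, now - timedelta(hours=5), 0, 0),
    Signup("lurker", True, now - timedelta(days=10), 0, 0),
    Signup("poster", False, now - timedelta(days=10), 2, 0),
]
print([s.name for s in junk_signups(signups, now)])  # ['bot_1']
```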

Hey look, it’s me in the picture! What a waste of my 15 minutes of fame

IMO, in the long term, Lemmy needs to move away from upvotes as a measure of interest and activity. They’re too easy to manipulate.

Perhaps comment activity and interaction metrics would be better.
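As a rough illustration of that idea, a ranking signal could weight discussion more heavily than raw votes. Everything here is an assumption: the `engagement_score` name, the weights, and the choice of `log1p` to give each signal diminishing returns. Distinct commenters get the largest weight so one account spamming replies counts for little. Bots can comment too, so this raises the cost of manipulation rather than eliminating it.

```python
import math


def engagement_score(upvotes: int, comments: int, distinct_commenters: int,
                     vote_weight: float = 0.1) -> float:
    """Hypothetical ranking signal that leans on discussion rather than raw votes."""
    # log1p dampens each term, so flooding any single signal has diminishing returns.
    return (vote_weight * math.log1p(upvotes)
            + math.log1p(comments)
            + 2.0 * math.log1p(distinct_commenters))


# A vote-stuffed post with no discussion vs. a modest post with real conversation.
print(engagement_score(50, 30, 20) > engagement_score(2000, 0, 0))  # True
```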

I don’t have much to add, other than that I am an experienced admin and was dismayed at how vulnerable Lemmy is. Having an option for open registrations with no checks is not great. No serious platform would allow that.

I don’t know of a bulletproof way to weed out the bad actors, but a voting system that Lemmy can leverage, with a minimum reputation required to stay federated, might work. This would require some changes that I’m not sure the devs can or would make. Without any protection in place, people will get frustrated and abandon Lemmy. I would.
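One way such a reputation threshold could work, sketched under assumptions: instances cast trusted/untrusted votes on each other, and a target stays federated only if enough distinct peers rated it and the trusted share meets a minimum. Nothing here is an existing Lemmy API; `federation_allowlist`, the 0.6 threshold, and the 3-voter minimum are hypothetical.

```python
from __future__ import annotations

from collections import defaultdict
from typing import Iterable


def federation_allowlist(votes: Iterable[tuple[str, str, bool]],
                         min_reputation: float = 0.6,
                         min_voters: int = 3) -> set[str]:
    """Return instances whose share of "trusted" votes meets `min_reputation`.

    `votes` holds (voter_instance, target_instance, trusted) tuples. Instances
    rated by fewer than `min_voters` distinct peers are left out.
    """
    ballots: dict[str, dict[str, bool]] = defaultdict(dict)
    for voter, target, trusted in votes:
        if voter == target:
            continue  # an instance can't vouch for itself
        ballots[target][voter] = trusted  # a later vote from the same voter replaces the earlier one
    allowed = set()
    for target, by_voter in ballots.items():
        if len(by_voter) >= min_voters:
            share = sum(by_voter.values()) / len(by_voter)
            if share >= min_reputation:
                allowed.add(target)
    return allowed


votes = [
    ("a.example", "good.example", True),
    ("b.example", "good.example", True),
    ("c.example", "good.example", True),
    ("a.example", "spam.example", False),
    ("b.example", "spam.example", False),
    ("c.example", "spam.example", True),
]
print(federation_allowlist(votes))  # {'good.example'}
```

The weak spot is who gets to vote: if new instances can be spun up in seconds, one operator could stuff the ballot with throwaway instances, so a real version would need to restrict or weight voters (for example, by instance age or existing reputation). How to treat new, unrated instances is also a policy choice; this sketch simply leaves them out.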

When I made a post saying that 90% (now ~95%) of accounts on Lemmy are bots, the number of people saying there’s no proof, and/or telling me that a lot of people are joining from Reddit right now, was astonishing.

Edit: one person told me that no one would make 1.6 million bots when there are only 150k-200k users on the platform. Like, WTF.

Another thing: people are likely pre-creating bot accounts and then sitting on them in case additional protections are created…

The problem is that these accounts look to us just like any new user lurking around to get a feel for the place; there’s no way to distinguish them until they start acting like bots in some fashion.

- Hiring kids in africa and india to create accounts for 2 cents an hour.

Heads up: this depends on the size of the operation. Captchas are a solved problem. Commercial software exists that can solve Captchas automatically. You migrate from pay-on-demand services to computer-vision software when it becomes financially beneficial.

Computers are cheaper and better at solving Captchas than humans atm, and it doesn’t look like that’s going to change any time soon. As long as you pay attention to your proxies, it’s rare to see solution attempts fail. Some pay on demand services no longer employ people.
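The migration point described in that comment is a simple break-even calculation: once monthly volume times the per-solve saving exceeds the solver's fixed cost, running your own software wins. The prices below are made-up placeholders, not real market rates, and `break_even_solves` is a hypothetical helper.

```python
import math


def break_even_solves(service_price_per_1000: float,
                      software_monthly_cost: float,
                      software_cost_per_1000: float = 0.0) -> float:
    """Monthly captcha volume above which an in-house solver beats a pay-per-solve service."""
    saving_per_1000 = service_price_per_1000 - software_cost_per_1000
    if saving_per_1000 <= 0:
        return math.inf  # the service is never more expensive per solve
    return 1000 * software_monthly_cost / saving_per_1000


# Hypothetical prices: $2 per 1,000 solves vs. $500/month for software.
print(break_even_solves(2.0, 500.0))  # 250000.0
```

For admins, the takeaway matches the comment: a captcha adds a per-account cost rather than a hard barrier, and under this model that cost per account keeps falling as the operation grows.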

Computers are cheaper and better at solving Captchas than humans atm

This is hilarious

Hilarious and true

This is troubling.

At least we have the data, though. Hopefully these findings are useful for updating the Fediseer/Overseer so we can detect bots more easily.

I really wish a good data scientist or ML person would jump into this thread.

I can easily dig through data, and I can easily dig through code, but someone who could perform intelligent anomaly detection would be a godsend right now.
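As one small illustration of that kind of anomaly detection (not a substitute for someone who does this professionally), posts whose upvotes are wildly out of proportion to their discussion could be flagged with the modified z-score. It uses the median and the median absolute deviation (MAD), so the outliers themselves don't inflate the baseline. The upvote-to-comment feature, the `flag_vote_anomalies` name, and the conventional 3.5 cutoff are assumptions for this sketch.

```python
import math
from statistics import median


def flag_vote_anomalies(posts: dict, threshold: float = 3.5) -> set:
    """Flag posts whose upvote-to-comment ratio is a high outlier.

    `posts` maps post_id -> (upvotes, comments). Uses the modified z-score,
    0.6745 * (x - median) / MAD, and only flags the high side.
    """
    # Log ratio of upvotes to comments; log1p handles zero counts.
    ratios = {pid: math.log1p(up) - math.log1p(c) for pid, (up, c) in posts.items()}
    values = list(ratios.values())
    med = median(values)
    mad = median([abs(v - med) for v in values])
    if mad == 0:
        return set()  # no spread to measure against
    return {pid for pid, v in ratios.items() if 0.6745 * (v - med) / mad > threshold}


posts = {"p1": (40, 12), "p2": (55, 20), "p3": (30, 9), "p4": (70, 25), "p5": (2000, 3)}
print(flag_vote_anomalies(posts))  # {'p5'}
```

A single ratio is easy to game once it is known; a real detector would combine several features (account age, voting timing, which instances the votes come from).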

There are data scientists around, and we are monitoring where this goes.

The biggest problem I currently see is how to share data effectively while preserving privacy. Can this be solved without sharing emails and IP addresses, or would that be necessary? Maybe securely hashing emails and IP addresses is enough, but that would hide some important data.

Should that be shared only with trusted users?
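On the hashing question: a plain SHA-256 of an IPv4 address can be reversed by brute force, since there are only about 4.3 billion addresses, and common e-mail addresses can be guessed the same way. A keyed hash (HMAC) with a secret shared only among trusted admins avoids that, which also fits the idea of sharing only with trusted users. To keep some of the information plain hashing hides, the sketch also hashes the surrounding network block, so analysts can see that many sign-ups came from the same block without learning which block. The function names, the normalization, and the /24 and /48 prefix lengths are assumptions.

```python
from __future__ import annotations

import hashlib
import hmac
import ipaddress


def pseudonymize(value: str, key: bytes) -> str:
    """HMAC-SHA256 of a normalized identifier (e-mail or IP), hex-encoded."""
    # Assumed normalization: trim whitespace and lowercase, so "Bob@Example.com"
    # and "bob@example.com" produce the same token.
    normalized = value.strip().lower()
    return hmac.new(key, normalized.encode("utf-8"), hashlib.sha256).hexdigest()


def pseudonymize_ip(ip: str, key: bytes, v4_prefix: int = 24, v6_prefix: int = 48) -> dict[str, str]:
    """Token for the exact address plus a token for its surrounding network block."""
    addr = ipaddress.ip_address(ip.strip())
    prefix = v4_prefix if addr.version == 4 else v6_prefix
    network = ipaddress.ip_network(f"{addr}/{prefix}", strict=False)
    return {"ip": pseudonymize(str(addr), key), "network": pseudonymize(str(network), key)}


key = b"shared-secret-held-only-by-trusted-admins"  # hypothetical shared key
a = pseudonymize_ip("203.0.113.7", key)
b = pseudonymize_ip("203.0.113.99", key)
print(a["network"] == b["network"], a["ip"] == b["ip"])  # True False
```

Anyone holding the key can still brute-force the tokens, so this only moves the trust question to who gets the key; it does not remove it.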

Can we create a dataset where humans identify bots, and then share it with the larger community (like Kaggle) to help us with ideas?

There are options, and they will be built; it just can’t happen in a few days. People are working nonstop to fix (currently) more important issues.

Be patient, collect the data, and let’s work on a solution.

And let’s be nice to each other; we all have similar goals here.

Honestly, I’m interested to see how the federation handles this problem. Thank you for all the attention you’re bringing to it.

My fear is that we might overcorrect by becoming too defederation-happy, which seems to be a fear you share. However, I disagree with your assertion that the federation model is riskier than conventional Reddit-like models. Instance owners have just as many tools (more, in fact) as Reddit does to combat bots on their instance. Plus, we have the nuke-from-orbit defederation option.

Since it seems like most of these bots are coming from established instances (rather than spoofing their own), I agree with you that the right approach seems to be for instance mods to maintain stricter sign-ups (captcha, email verification, applications, or other original methods). My hope is that federation will naturally lead to a “survival of the fittest,” where more bot-ridden instances copy the methods of the less bot-ridden instances.

I think an instance should only consider defederation if it’s already being plagued by bot interference from a particular instance. I don’t think defederation should be a pre-emptive action.

Honestly, I’m interested to see how the federation handles this problem.

Ditto. Perhaps we’re going to see a new solution for an old problem.