Of the four features it claims are new in ES2023, the first two are false (but plausible, I suppose), the third is nonsensical (it’s just destructuring), and the last was released in ES2017.

And yet people will blindly trust AI-~~hallucinated~~generated results… To be fair, it’s no worse than the articles some people write on those nonsense websites.

Everyone is freaking out that bad AI content is going to ruin the internet, when the internet was already ruined long ago by terrible human-generated content.

The biggest problem is that now it will be mass-generated with little effort. If most of the web becomes ChatGPT-generated articles, it’s time to abandon Google. Better to talk to ChatGPT directly.

For about six months now, I’ve been doing the classic “read the official documentation and changelogs” routine, even when the docs are as bad as Microsoft’s (their changelogs are good, though). I guess we will enter a new era of RTFM while AI matures and we find ways to filter out AI-generated noise.

Looking at it optimistically, maybe we’ll start seeing some improvements in documentation as everything else becomes useless.

Looking at it pessimistically, the documentation is simply going to be GPT reading the latest code commits and breaking down the changes in a “documented changes” doc, lol.

Maybe a silly question: how do we know this was generated with GPT?

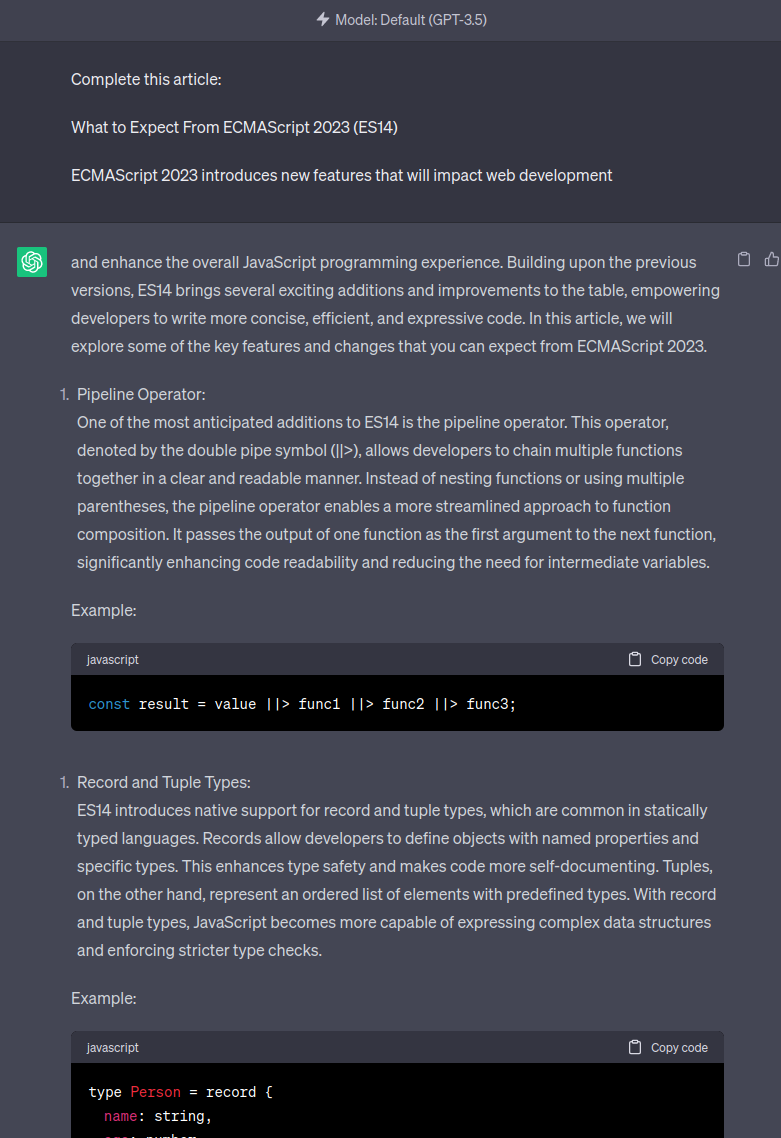

The current versions of ChatGPT are quite stable in their outputs. If I enter the title and subtitle of this article, it completes the article with very similar results:

Ha! Nice spotting.